If you are interested in AI (artificial intelligence), ChatGPT hardly needs an introduction. You have probably already tested “the beast” yourself.

Before this chatbot took the Web by storm, you could use it freely.

From now on, its premium version, ChatGPT Plus, comes at a price.

The age of AI is upon us. Whether it’s ChatGPT, Google Bard or others, turning to these tools to make our lives easier will become second nature in the coming years.

In the medical field, we can imagine the following scenario: you are sick and, rather than wasting time finding a doctor, making an appointment and then going to the pharmacy, you decide to turn to ChatGPT.

You find its answer convincing and manage to obtain an “alternative” treatment.

The upshot: AI has saved you time in a situation that, apparently, did not require you to see a health-care professional.

ChatGPT info

With a bit of hindsight, it is true that ChatGPT has some very interesting features.

It belongs to the family of generative AI, capable of producing targeted information in response to a query.

ChatGPT has even been tested on patient case studies to assess the quality of its diagnoses.

But let’s temper our enthusiasm a little.

First of all, let’s remember that ChatGPT is not an AI in the strict sense of the term.

Although it is often referred to as a “conversational” AI, ChatGPT is not an AI as such and cannot be considered a medical AI.

Therefore, it will not replace your doctor.

In this regard, we will see that the solution proposed by Galeon is the future of medical AI and more generally, of health.

The “birth” of ChatGPT: how it works and its versions (GPT-3)

ChatGPT is not an AI

In reality, it is a combination of AI tools.

ChatGPT is a chatbot created by OpenAI and launched to the public in November 2022.

It uses AI to process a vast amount of data, some of it validated by humans and some not. It is the combination of all this training that allows ChatGPT to give you a final answer.

It relies on supervised learning (learning from existing examples written by humans) and on reinforcement learning from human feedback, in which human ratings of its answers are used to refine its behavior.

The successive GPT models behind ChatGPT:

- GPT-1, released in 2018: a lightweight precursor of the current tool that proved the approach worked and showed its potential on a wider range of data;

- GPT-2, released in 2019: a version with more parameters, trained on more data;

- GPT-3, released in 2020 and the most recent at the time of writing: it shows an impressive ability to answer increasingly complex queries, increasingly quickly, in structured text (ChatGPT itself was built on a refined version, GPT-3.5).

Why ChatGPT, Google Bard and others will not replace your doctor

What ChatGPT lacks in terms of AI

As mentioned earlier, ChatGPT answers your questions based on its “learning”.

However, this learning takes no account of sensitive patient data, nor of the emotional and relational bond a doctor builds with a patient during a consultation.

Typically, ChatGPT will not ask you (at least, not yet) to rate your pain on a scale of 1 to 10. Yet each patient perceives pain in his or her own way; no two people feel it the same.

What Watson, Tay and other AIs were missing

ChatGPT is not there yet, and the AIs that tried before it produced rather disappointing results.

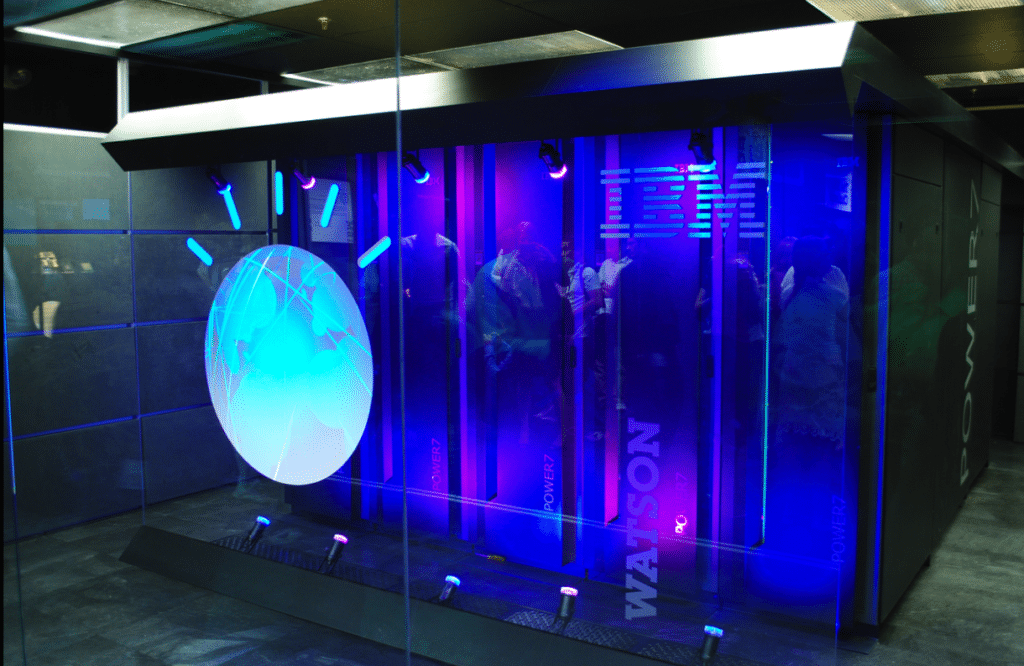

The case of IBM Watson

One example is IBM Watson, an AI program developed by IBM from the mid-2000s.

Initially developed as a conversational tool, the program failed several tests, including the Turing test. It was then judged potentially useful in the medical field.

Unfortunately, the limits of this medical AI soon became apparent: its data was restricted to studies and reports published by professionals in the field.

Yet a number of these published studies lacked objectivity and proper controls.

This later cast doubt on the answers Watson gave.

The Tay case

There was also the story of Tay, a chatbot Microsoft launched on Twitter in 2016.

Without going into detail, the limits of this program quickly became clear, given all the abuses it was open to.

With very few safeguards in place, the chatbot’s responses went off the rails, forcing Microsoft to take it offline.

These experiences show how important it is for this type of AI to be fed quality data at the source (raw, structured data).

And we can tell you, this is exactly the vision Galeon has adopted for medical AI.

Galeon, the reference AI in medical matters

Galeon is, without a doubt, the reference in medical AI for the years to come.

their consultations or the creation of patient files.

Galeon is also a medical AI that will generate very precise answers thanks to a structured database and blockchain swarm learning (a decentralized form of machine learning).

In this way, Galeon is certain to support the decisions of health professionals.

By contrast, ChatGPT and similar forms of AI will be limited to offering mere avenues of thought, based on more or less shaky data and cut off from patient data, which is far too sensitive.

Yet without such data, no serious approach to AI-based medical assistance can be envisaged.
